Top 100: Passwords

First published on 7 February 2021

Last updated on 8 February 2021

Introduction

In late 2017, Scott Linux published the breachcompilation.txt on Github, a comprehensive word list that comprises about 1.4 billion passwords from all over the world. The uncompressed file is about nine gigabytes in size and was created from 41 gigabytes of Stash (Bitbucket Server) data. In this post, I illustrate a brief analysis of the compilation, using a variety of bash and python commands to handle the relatively large amount of text data. Furthermore, I evaluate my findings and argue that the most reasonable way to use passwords is with the help of password managers like KeePass.

Methodology

Trying to load the text file into Pandas DataFrames, I quickly stretched my high-end consumer laptop with 16 gigabytes of RAM to its limits. In the same way, NumPy failed to load the data into an array. A brief exploration of methods for big data analysis led me to Dask DataFrames, which are parallel DataFrame composed of many smaller Pandas DataFrames, split along the index. However, simple value counting computations made my laptop hit the wall, even though I created a 64 gigabyte swap file to expand the RAM. Finally, I decided to preprocess the text file using bash and to split it into multiple files, which could then be processed using Pandas DataFrames. The following lists contains all preprocessing steps that I made using bash:

- Remove lines containing non-alphanumeric characters:

$ sed -i '/[^[:alnum:]_@]/d' passwd - Sort alphabetically:

$ sort passwd - Remove duplicate lines

$ awk '!visited[$0]++' passwd > passwd - Split into files with a maximum file size of 3333 Mb:

$ split -C 3333m --numeric-suffixes passwd > passwd - Count number of occurrence of each line:

$ uniq -c passwd - Remove leading whitespaces:

$ sed -e 's/^ *//' passwd - Add index line to the beginning of the file:

$ echo 'count password' | cat - passwd > temp && mv temp passwd

Subsequent to the preprocessing followed an analysis of the data using Pandas DataFrames in Python:

- Read each file as a CSV, sort the values in a descending order and write the data to new CSV files.

- Read each CSV file, find the 333 largest value pairs (count, password), and create a DataFrame comprising the largest values of all three files.

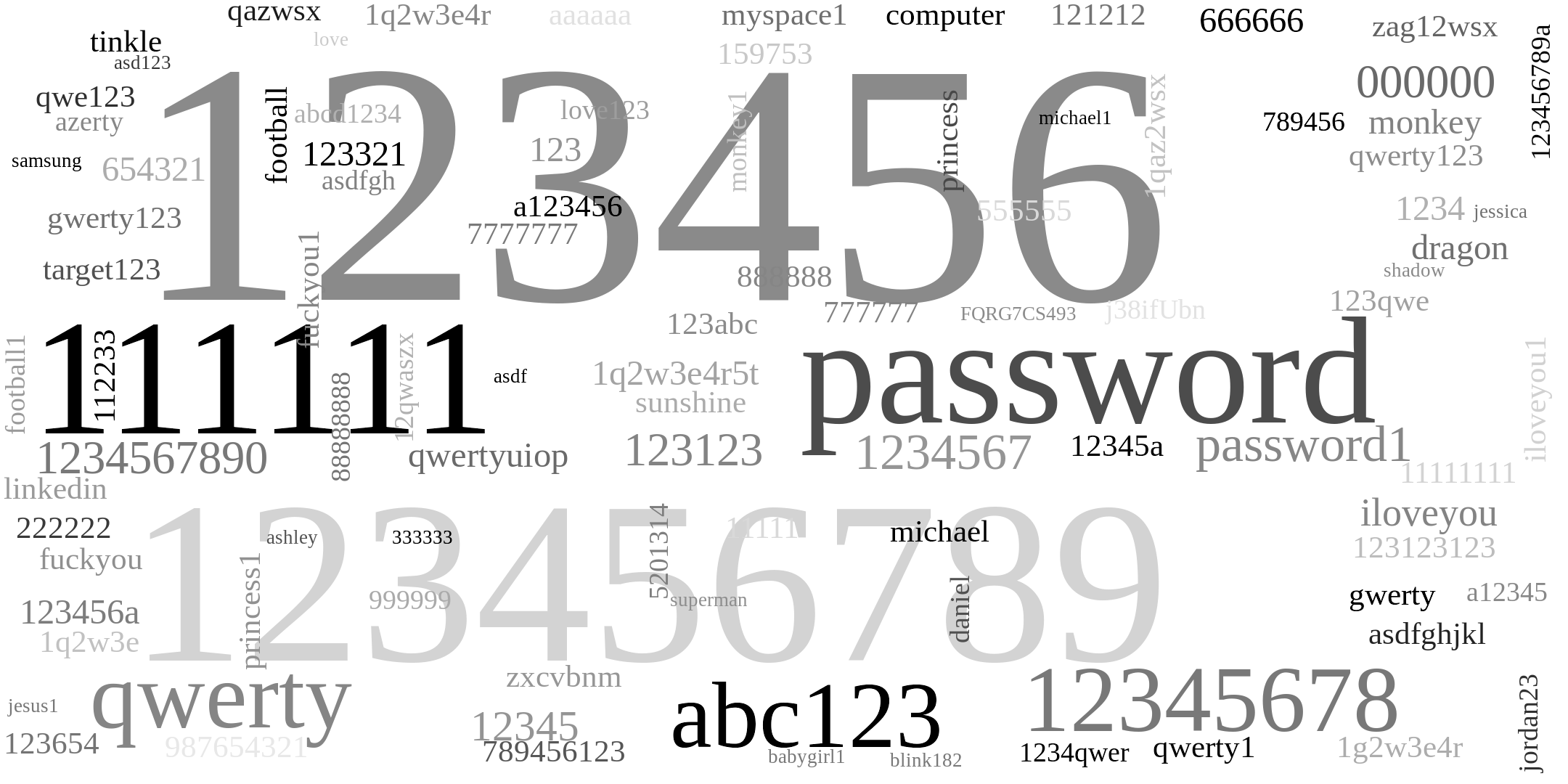

- Again, sort the 999 largest values, find the 100 largest values of the whole compilation, and generate a word cloud from the frequencies.

(The entire code for this post can be found in this Jupyter Notebook.)

Analysis

Let us now take a look at the resulting word cloud:

Conclusion

In a nutshell, almost all passwords that come to our minds also come to the minds of millions of other people around the world. Our passwords are often not as unique as we like to think. At this point, I want to shed some light on the benefits of using password managers. Personally, I use a password manager called KeyPassXC, which allows me to generate very strong passwords for all accounts that I have. All passwords are securely protected by a master key, the only password that I have to remember: iloveyou.